Discovering Physics …

If seeing is believing, then measuring is knowing

Seeing versus measuring

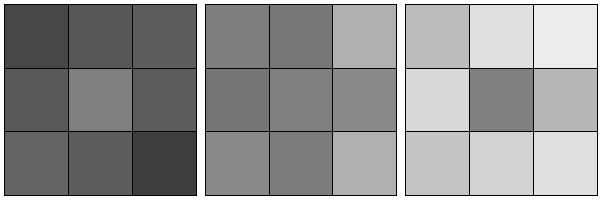

Imagine looking at an ordinary image: people at an interesting place or time, a breathtaking landscape, or a painting that weaves together bold colours in a visually arresting way. You might think about the context of the subjects in the image, their histories and futures, or why the image was taken. Far less often do we quantify the relative contrasts we perceive in an image in any meaningful way. In fact, simple visual examples show that our visual system is terrible at such quantitation (Figure 1). Our vision also performs poorly in very low light, even after adaptation. Unlike a camera capable of long-exposure imaging, you might not see details in a dimly lit street even if you stared long and hard at it. One might blame this on the fact that our vision is not quantitative: our brain does not ‘add’ together the successive images that we see to produce a less noisy ‘long exposure’ image.

Put simply, our eyes can see but our brains do not measure.

Imaging nanometer-size particles relies heavily on quantitation and computation

The optics and technology for imaging biomolecules and nanoparticles have advanced tremendously over the last century. We are now able to see atoms, molecules and nanomachines, and even infer the chaotic, dynamic dance that they undergo. In the early days of microscopy, the imaging of such nanometer-size objects was guided by human capabilities in feature detection and hence remained heuristic. Increasingly, however, these images are quantised and digitised to allow image ‘arithmetic’, segmentation, feature detection and inference that are far beyond what human vision is capable of. So whether you like it or not, modern high-resolution imaging of nanometer-size objects almost always involves a trail of computational algorithms.

Importance of coherent imaging in transmission electron microscopy of weak phase objects

Although our group works on both x-ray and electron-based imaging techniques, here we restrict the discussion to electron microscopy.

First, we have to understand a useful characteristic of the electrons used in modern imaging: coherence. Consider the imaging electrons streaming down a transmission electron microscope, trained on a nanometer-size object. Quantum mechanics tells us that these electrons propagate as a wavefield from the electron gun down the microscope column, through the sample, through a series of focusing lenses and finally onto an electron detector. If these electrons have only a small spread of energies (i.e. they are practically monochromatic), they propagate more or less like a plane or spherical wave. A neat property of such simple, monochromatic wavefunctions is that they are coherent and propagate in free space in a predictable and mathematically straightforward fashion. As a result, if you measure the complete complex-valued electron wavefunction on a particular focal plane, you can computationally re-propagate this wave to other focal planes of your choosing. However, if small random phase fluctuations are introduced into this wavefunction in both space and time, the wavefield loses its coherence and the computationally re-propagated wave becomes spatially blurred (i.e. it loses resolution).
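To make the idea of computational re-propagation concrete, here is a minimal sketch in Python with NumPy. It assumes a perfectly coherent, monochromatic wave and the standard paraxial (Fresnel) free-space propagator; the function name `propagate`, the grid size and the numerical values (a wavelength of roughly 2 pm, about right for 300 keV electrons) are illustrative choices, not parameters of any particular microscope.

```python
import numpy as np

def propagate(wave, distance, wavelength, pixel_size):
    """Propagate a 2D complex wavefield through free space (paraxial Fresnel sketch).

    All lengths share one unit (nanometres in the example below).
    """
    ny, nx = wave.shape
    # Spatial frequencies of the sampling grid, in cycles per unit length
    fx = np.fft.fftfreq(nx, d=pixel_size)
    fy = np.fft.fftfreq(ny, d=pixel_size)
    f2 = fx[np.newaxis, :] ** 2 + fy[:, np.newaxis] ** 2
    # Free-space transfer function: a pure phase factor for every frequency
    transfer = np.exp(-1j * np.pi * wavelength * distance * f2)
    return np.fft.ifft2(np.fft.fft2(wave) * transfer)

# Hypothetical example: a wave carrying small random phase shifts
rng = np.random.default_rng(0)
phase = 0.1 * rng.standard_normal((64, 64))
exit_wave = np.exp(1j * phase)

wavelength = 0.00197   # nm, illustrative
pixel_size = 0.1       # nm, illustrative

# Propagate 500 nm forward, then computationally back again
detector_wave = propagate(exit_wave, 500.0, wavelength, pixel_size)
recovered = propagate(detector_wave, -500.0, wavelength, pixel_size)

print(np.allclose(recovered, exit_wave))
```

Because the transfer function only multiplies each spatial frequency by a phase factor, propagating by a negative distance undoes the forward step exactly. This works only because the full complex wave is available; the intensity alone is not enough.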

Second, let’s look at what information is encoded in the complex-valued wave that leaves the sample, known as the exit wave. As an electron wave passes through a nanometer-size sample, the features of the sample are encoded in the subtle phase shifts that the sample imparts onto the incident electron wave. When a sample mainly shifts the phase of the wave in this way, and only slightly, we typically call it a “weak phase object”. Combined with coherence, this means that if you measure the complete coherent electron wavefunction at the detector, you can recover the complete wavefunction just as it exits your sample, and with it these phase shifts.
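In symbols, the weak phase object picture is often written as below. The notation is assumed here for illustration: $\psi_0$ is the incident wave and $\varphi(\mathbf{r})$ is the small phase shift imparted by the sample at position $\mathbf{r}$.

$$
\psi_{\mathrm{exit}}(\mathbf{r}) = \psi_0 \, e^{i\varphi(\mathbf{r})} \approx \psi_0 \left[ 1 + i\varphi(\mathbf{r}) \right], \qquad |\varphi(\mathbf{r})| \ll 1 .
$$

The sample information lives entirely in $\varphi(\mathbf{r})$, while the amplitude of the exit wave is unchanged: $|\psi_{\mathrm{exit}}(\mathbf{r})|^2 = |\psi_0|^2$.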

Missing phase information

Now there is a common misconception that “phases are measured” in electron microscopy since images are collected instead of diffraction intensities. In fact, even our most advanced electron detectors only measure the squared amplitude of the electron wavefunction, regardless of whether detection happens in the image plane or the diffraction plane. To oversimplify a little, if the value of the wavefunction at each point is represented by a 2D vector in the complex plane, then we only measure this vector’s magnitude (amplitude) and not its direction (angle or phase).
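A small numerical illustration of this point, as a sketch only: two hypothetical wavefunctions with identical amplitudes but unrelated phases produce identical detector readings. The detector here is idealised as recording the squared amplitude exactly, with no counting noise.

```python
import numpy as np

rng = np.random.default_rng(1)

# Two made-up wavefunctions: same amplitude everywhere, different random phases
amplitude = np.ones((4, 4))
phase_a = rng.uniform(-np.pi, np.pi, size=(4, 4))
phase_b = rng.uniform(-np.pi, np.pi, size=(4, 4))
wave_a = amplitude * np.exp(1j * phase_a)
wave_b = amplitude * np.exp(1j * phase_b)

# An idealised detector records only the squared amplitude of each pixel
intensity_a = np.abs(wave_a) ** 2
intensity_b = np.abs(wave_b) ** 2

print(np.allclose(wave_a, wave_b))            # the waves themselves differ
print(np.allclose(intensity_a, intensity_b))  # the measurements do not
```

The direction of each complex vector (its phase) never reaches the recorded data, which is why it has to be inferred by other means.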

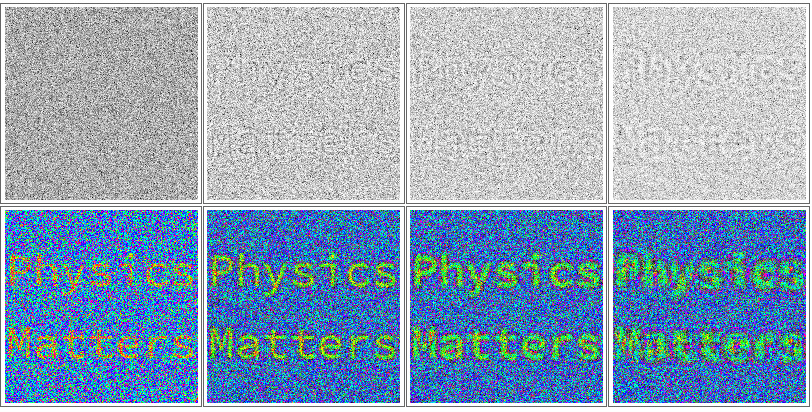

Essentially, current detectors only measure the intensity of the interference patterns obtained by mixing these phase shifts. To enhance the contrast of small particles without access to these phases, we typically tune our microscopes to form highly defocused images that increase this phase mixing. This phase mixing can dramatically alter the probabilities for electrons to reach different parts of our detector (in exactly the same way that intensity nodes form in interference patterns). These altered probabilities increase the contrast variations in an image (top row of Figure 2).
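The effect of defocus on a phase-only object can be sketched numerically. The example below assumes an idealised, fully coherent wave, models defocus as free-space (Fresnel) propagation, and uses a made-up Gaussian phase bump as the sample; the function name, the sign convention for defocus and all numerical values are illustrative assumptions.

```python
import numpy as np

def propagate(wave, distance, wavelength, pixel_size):
    """Paraxial free-space propagation of a 2D complex wavefield (sketch)."""
    ny, nx = wave.shape
    fx = np.fft.fftfreq(nx, d=pixel_size)
    fy = np.fft.fftfreq(ny, d=pixel_size)
    f2 = fx[np.newaxis, :] ** 2 + fy[:, np.newaxis] ** 2
    transfer = np.exp(-1j * np.pi * wavelength * distance * f2)
    return np.fft.ifft2(np.fft.fft2(wave) * transfer)

n = 128
pixel_size = 0.1       # nm, illustrative
wavelength = 0.00197   # nm, illustrative

# Hypothetical weak phase object: a 0.5 nm wide bump with a 0.05 rad peak shift
y, x = np.indices((n, n)) - n // 2
r2 = (x ** 2 + y ** 2) * pixel_size ** 2
phase = 0.05 * np.exp(-r2 / (2 * 0.5 ** 2))
exit_wave = np.exp(1j * phase)

# In focus, the detector sees |exp(i*phase)|^2 = 1 everywhere: no contrast
in_focus = np.abs(exit_wave) ** 2

# Defocusing by 1000 nm mixes the phase shifts into intensity variations
defocused = np.abs(propagate(exit_wave, 1000.0, wavelength, pixel_size)) ** 2

contrast_in_focus = in_focus.std()
contrast_defocused = defocused.std()
print(contrast_in_focus, contrast_defocused)
```

The in-focus image of a pure phase object is featureless, while the defocused one shows intensity variations. The defocused intensity is still not the phase itself, only an interference pattern shaped by it.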

Imaging at high defocus, however, introduces aberrations and comes at the expense of resolution. Another option for enhancing this interference contrast is to introduce a phase difference between the scattered and unscattered electron wavefunctions using a phase plate. However, because the electrons are highly ionising, electron phase plates age quickly with exposure. This creates inconsistent imaging conditions, which complicate signal averaging between different images and lower the overall usability and effectiveness of electron phase plates. Notably, neither of these two commonly used contrast enhancement options retrieves the phases of the electron wavefunction.

Computational phase retrieval for low dose imaging

Suppose now that we had a way of reconstructing the complete complex-valued wavefield at the detector (i.e. of retrieving the phases of the electron wavefunction as well). Then, for highly coherent electrons, we could also computationally recover the complex-valued exit wave just after the sample. In fact, we could also computationally remove aberrations introduced by optical elements in the microscope, provided these can be quantitatively calibrated.
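As a rough sketch of what removing calibrated aberrations could look like, the example below assumes that the complex wave at the detector is already known and that the only aberrations are defocus and spherical aberration, described by the commonly used phase function $\chi(f) = \pi \lambda \, \Delta f \, f^2 + \tfrac{1}{2} \pi C_s \lambda^3 f^4$. Sign conventions differ between texts, and the function names and numerical values here are hypothetical.

```python
import numpy as np

def aberration_phase(shape, pixel_size, wavelength, defocus, cs):
    """Aberration phase chi(f) for defocus and spherical aberration (one convention)."""
    ny, nx = shape
    fx = np.fft.fftfreq(nx, d=pixel_size)
    fy = np.fft.fftfreq(ny, d=pixel_size)
    f2 = fx[np.newaxis, :] ** 2 + fy[:, np.newaxis] ** 2
    return (np.pi * wavelength * defocus * f2
            + 0.5 * np.pi * cs * wavelength ** 3 * f2 ** 2)

def apply_aberration(wave, chi):
    """Simulate the lens: multiply each spatial frequency by exp(-i*chi)."""
    return np.fft.ifft2(np.fft.fft2(wave) * np.exp(-1j * chi))

def remove_aberration(wave, chi):
    """Undo a calibrated aberration by applying the conjugate phase factor."""
    return np.fft.ifft2(np.fft.fft2(wave) * np.exp(1j * chi))

# Hypothetical exit wave with small random phase shifts
rng = np.random.default_rng(2)
exit_wave = np.exp(1j * 0.1 * rng.standard_normal((64, 64)))

# Illustrative calibration: 0.1 nm pixels, ~2 pm wavelength,
# 1000 nm defocus, 2.7 mm spherical aberration (2.7e6 nm)
chi = aberration_phase(exit_wave.shape, 0.1, 0.00197, 1000.0, 2.7e6)

aberrated = apply_aberration(exit_wave, chi)
corrected = remove_aberration(aberrated, chi)

print(np.allclose(corrected, exit_wave))
```

The correction is a simple multiplication only because the complex wave is available. With intensities alone, the same aberrations scramble the information in a way that cannot be undone by a single division.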

By dressing images of the electron wavefunctions passing through the sample with the correct phases, we can extract higher-contrast information about nanometer-scale objects at very low electron doses (bottom row of Figure 2). Since high-resolution imaging with fast electrons is fairly destructive to these small objects, phase retrieval allows us to mitigate the sample damage problem that haunts electron microscopy: are you seeing your sample in its native state or a terribly damaged version?

The future

My group is working out how to statistically infer the weak phases of nanometer-size objects even when their electron micrographs are extremely noisy. This project is technically challenging and combines ideas from several fronts: forward modeling with computational electron optics, statistical inference from known scattering and detection physics, and unsupervised machine learning by comparing images of similar objects.

Digitising images from our microscopes means that, unlike human vision, microscopes already measure rather than merely see. Ultimately, we would like to create efficient algorithms that provide visual intelligence for our microscopes, much like how our visual cortex augments the basic detection capabilities of our eyes. My hope is that in the future, when you and I buy microscopes, they will also come with a “brain”!